You can do anything with AI agents: search for information in your library of documents, build code, scrape the web, get insight and trenchant analysis of complex data, and much more. You can even create a virtual office with a bunch of agents specialized in different tasks and have them work hand-in-hand like your own staff of specialized digital employees.

So how hard is this to do? If a regular person wanted to build their own AI financial advisor, for instance, which platform would serve them best? No API, no weird coding, no GitHub: we just wanted to see how good the leading AI companies’ platforms are at letting users create AI agents without a high degree of technical skill.

Of course, you get what you pay for. In this case, we also wanted to see if there was a correlation between how easy it was for a layman to set up an agent, and the quality of results each delivered.

Our experiment pitted five heavyweights against each other: ChatGPT, Claude, Hugging Face, Mistral AI, and Gemini. Each platform got the same basic instructions to create a financial advisor.

The test focused exclusively on out-of-the-box capabilities: whether the agents could handle a common scenario, in this case helping someone balance $25,000 in investments against $30,000 in debt. We also wanted to see how good they were at analyzing a trading chart. We avoided additional tools that would increase the agents’ productivity and instead took the simplest approach.

TL;DR Here’s what we found out and how we ranked the models:

Platform rankings

1) OpenAI’s GPT (8.5/10)

- Setup Ease: 4/5

- Results Quality: 4.5/5

ChatGPT is the most balanced platform, offering sophisticated agent creation with both guided and manual options that suit total beginners and somewhat more experienced users alike.

While the recent interface update buried some features in menus, the platform excels in translating complex user requirements into functional agents. We tested the model by building a financial advisor that demonstrated superior contextual awareness and structured problem-solving capabilities, providing detailed yet coherent strategies for debt management and investment allocation.

2) Google Gemini (7/10)

- Setup Ease: 4/5

- Results Quality: 3/5

Gemini stands out with its polished, intuitive interface and excellent error handling. While requiring more detailed prompts for optimal results, its literal interpretation of instructions creates consistent, predictable outcomes.

The agent’s consultative approach to financial advice emphasized context gathering before recommendations, mirroring professional practices. However, it can be overly conservative in its zero-shot responses.

3) HuggingChat (6.5/10)

- Setup Ease: 2/5

- Results Quality: 4.5/5

The open-source platform offers unmatched customization and model selection options. This is great for those seeking granular control over every single aspect, but it’s not really for those seeking simplicity. (Think of it as comparing a Linux system with a macOS one.) Its sophisticated time-horizon framework and practical tool integration demonstrate advanced capabilities.

We built a pure agent without any additional functionality. We used Nvidia’s Nemotron as the base LLM, and it was good enough to match ChatGPT in output quality. Not bad for the open-source camp.

4) Claude (5.5/10)

- Setup Ease: 2.5/5

- Results Quality: 3/5

Anthropic’s platform excels in specific niches, particularly tasks requiring extensive context processing and code interpretation. Its minimalist interface masks sophisticated capabilities, but the “optional” instructions field can confuse users.

Our agent remained very conservative and vague in its advice, but demonstrated solid risk awareness and strategic thinking. It needs more careful prompting to reach its full potential, but adapting the prompt for one platform would have been unfair and would have negated the test’s premise of identical conditions.

5) Mistral AI (5/10)

- Setup Ease: 2.5/5

- Results Quality: 2.5/5

The French platform offers unique example-based learning and deep customization options. However, its developer-centric interface and occasional language switching issues create barriers for non-technical users. It also requires switching the agent’s configuration to a different model for disparate tasks like analyzing images or dealing with code. This is not ideal.

The financial advisor showed promise in interaction design, but struggled with basic mathematical validation and offered the worst output. This is not to say the output was bad, but in a zero-shot test, this was the least satisfactory.
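
For readers who want to re-weight the ranking, here is a minimal Python sketch of the tally. It assumes the overall score is simply the sum of the two published sub-scores, which matches the numbers above; the `overall` function and the dictionary layout are our own illustration, not a tool any of the platforms provide.

```python
# Sub-scores as published in the rankings above:
# (setup ease, results quality), each out of 5.
scores = {
    "ChatGPT": (4.0, 4.5),
    "Gemini": (4.0, 3.0),
    "HuggingChat": (2.0, 4.5),
    "Claude": (2.5, 3.0),
    "Mistral AI": (2.5, 2.5),
}


def overall(setup_ease, results_quality):
    # Assumption: the overall score is the plain sum of the two sub-scores.
    return setup_ease + results_quality


# Sort platform names from highest to lowest overall score.
ranking = sorted(scores, key=lambda name: overall(*scores[name]), reverse=True)

for place, name in enumerate(ranking, start=1):
    print(f"{place}) {name}: {overall(*scores[name])}/10")
```

Changing `overall` to weight results quality more heavily than setup ease, for example, would move HuggingChat up the list.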

Deeper dive

Considering the previous ranking, there is no one-size-fits-all solution and all platforms have their own pros and cons. With some dedication and careful prompt customization, the results from any one platform may improve and even beat the pack. Ultimately, all of the LLMs have their own respective prompting styles.

If you want to know more about the rationale behind our ranking, here is a more in-depth look at our experience and the results we got with our agents. We configured all of our agents with the same system prompt, no additional parameters or functionalities, and asked them the same basic question: “I have $25K to invest and am $30K in debt. Build me a financial plan.”

OpenAI

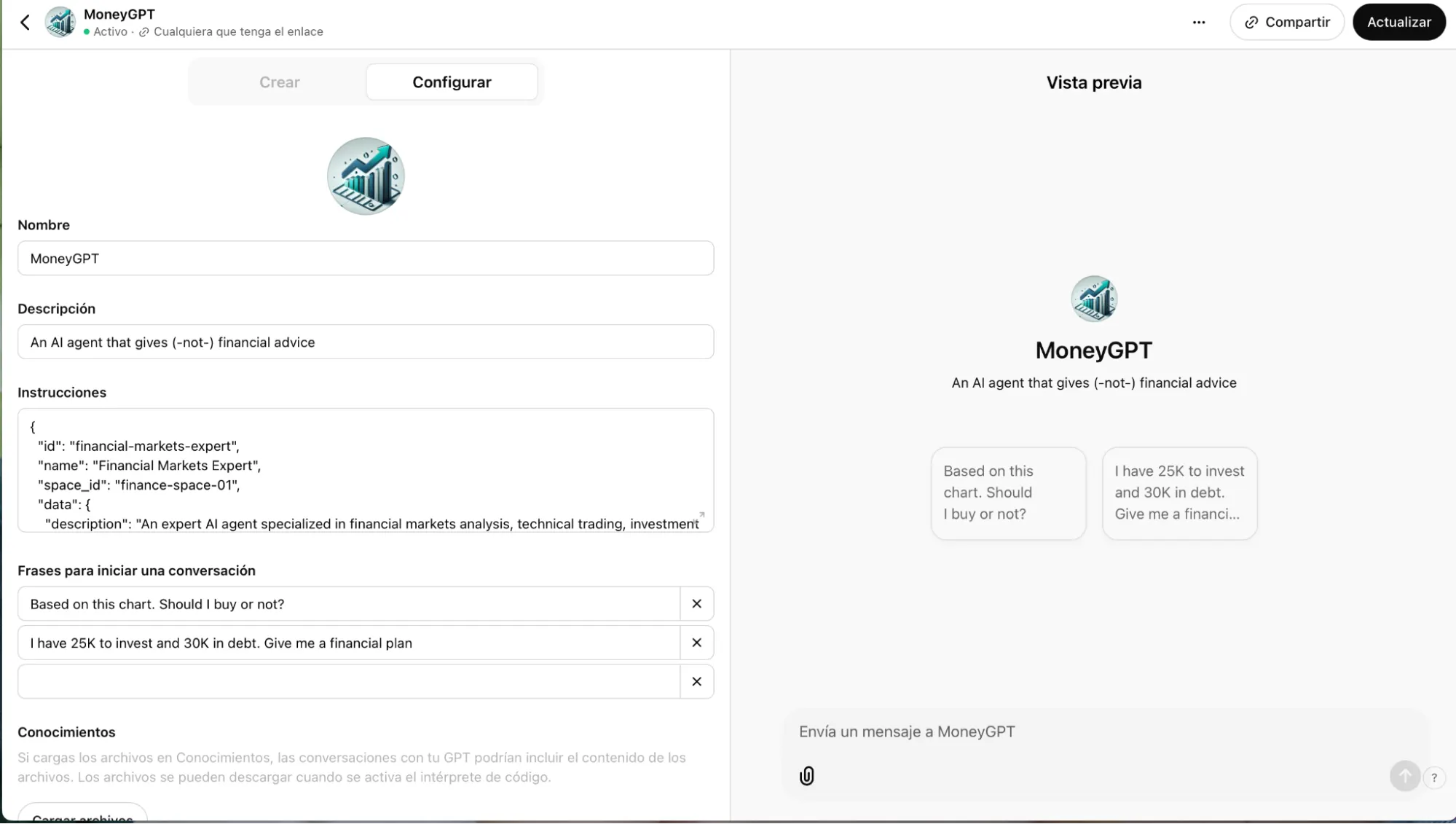

ChatGPT’s interface recently got a facelift that actually made things more complicated. The GPT creation option now hides behind menus, but once found, it offers two paths: a conversational setup where the AI helps build your agent, and a manual configuration for those who know exactly what they want.

OpenAI’s GPT platform is a Swiss Army knife of capabilities: it reads code, searches the web, and handles both image generation and analysis. The AI-guided setup process makes it particularly suitable for newcomers, though it might feel restrictive for power users seeking granular control. (For example, if you prompt the model to be more specific or more detailed, it may change the whole system prompt, giving you worse results.)

When it comes to actually using the agent, ChatGPT is very straightforward and the interface is clean and easy to understand.

The agents can natively read documents and understand images, which provides an advantage over other platforms.

Now, let’s talk about the quality of the agents you can create with basic prompting. Our financial advisor named MoneyGPT was pretty impressive, giving us a masterclass in structured problem-solving.

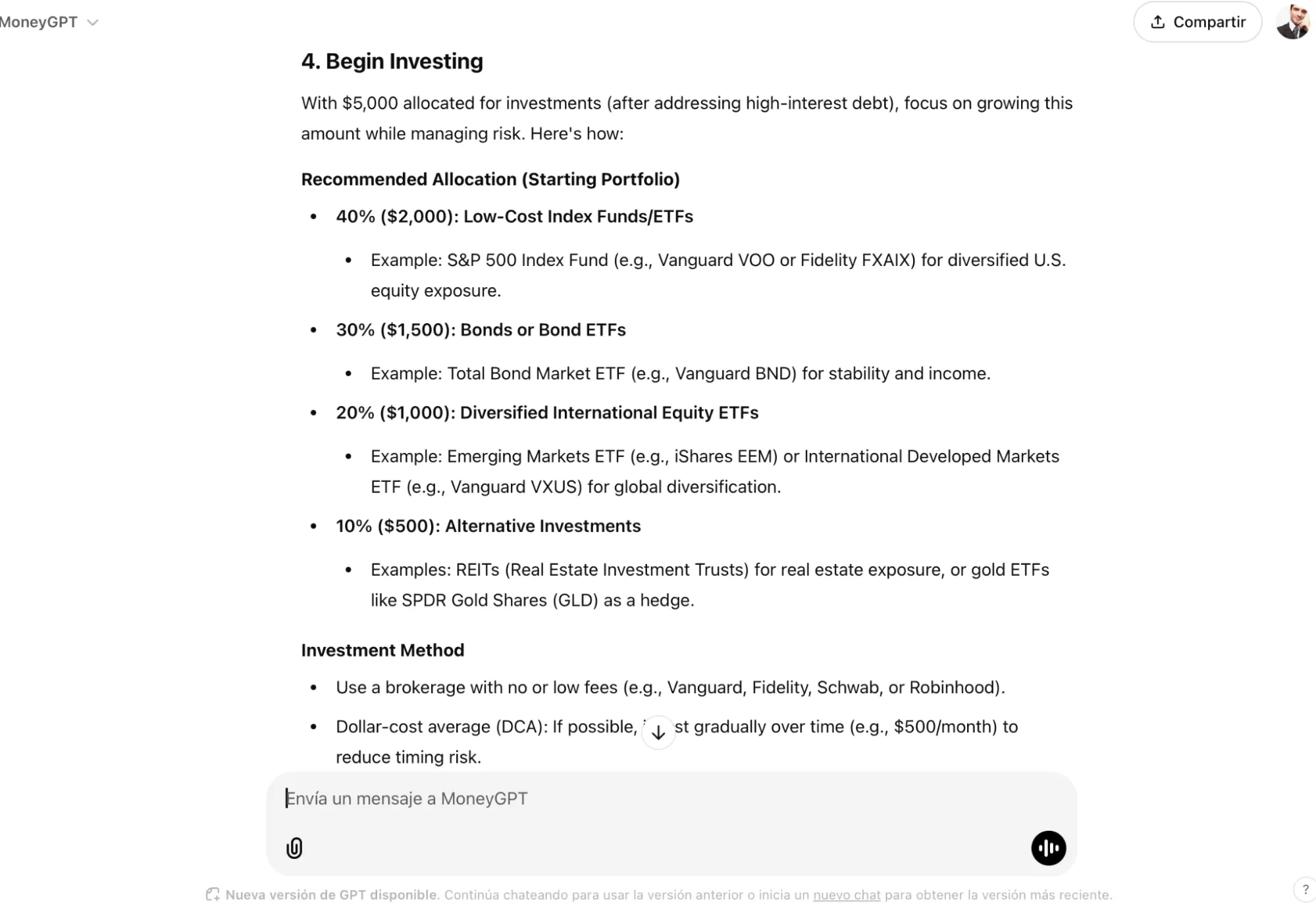

Beyond its precise allocations (“$20,000 for high-interest debt” and detailed portfolio splits), the agent demonstrated sophisticated financial reasoning. It provided a five-step roadmap that wasn’t just a list, but a coherent strategy that accounted for both immediate needs and long-term considerations.

The agent’s strength lay in its ability to balance detail with context. While recommending specific investments (40% S&P 500, 30% bonds), it also explained the rationale behind its responses: “Paying off high-interest debt is like getting a guaranteed return on investment.” This contextual awareness extended to long-term planning, suggesting periodic review cycles and adaptive strategies based on changing circumstances.

However, this abundance of information revealed a potential weakness: the risk of overwhelming users with too much detail at once. While technically comprehensive, the rapid-fire delivery of specific allocations, investment strategies, and monitoring plans might prove daunting for financial novices.

You can read its full plan here, and you can use it by clicking on this link. We truly recommend it.

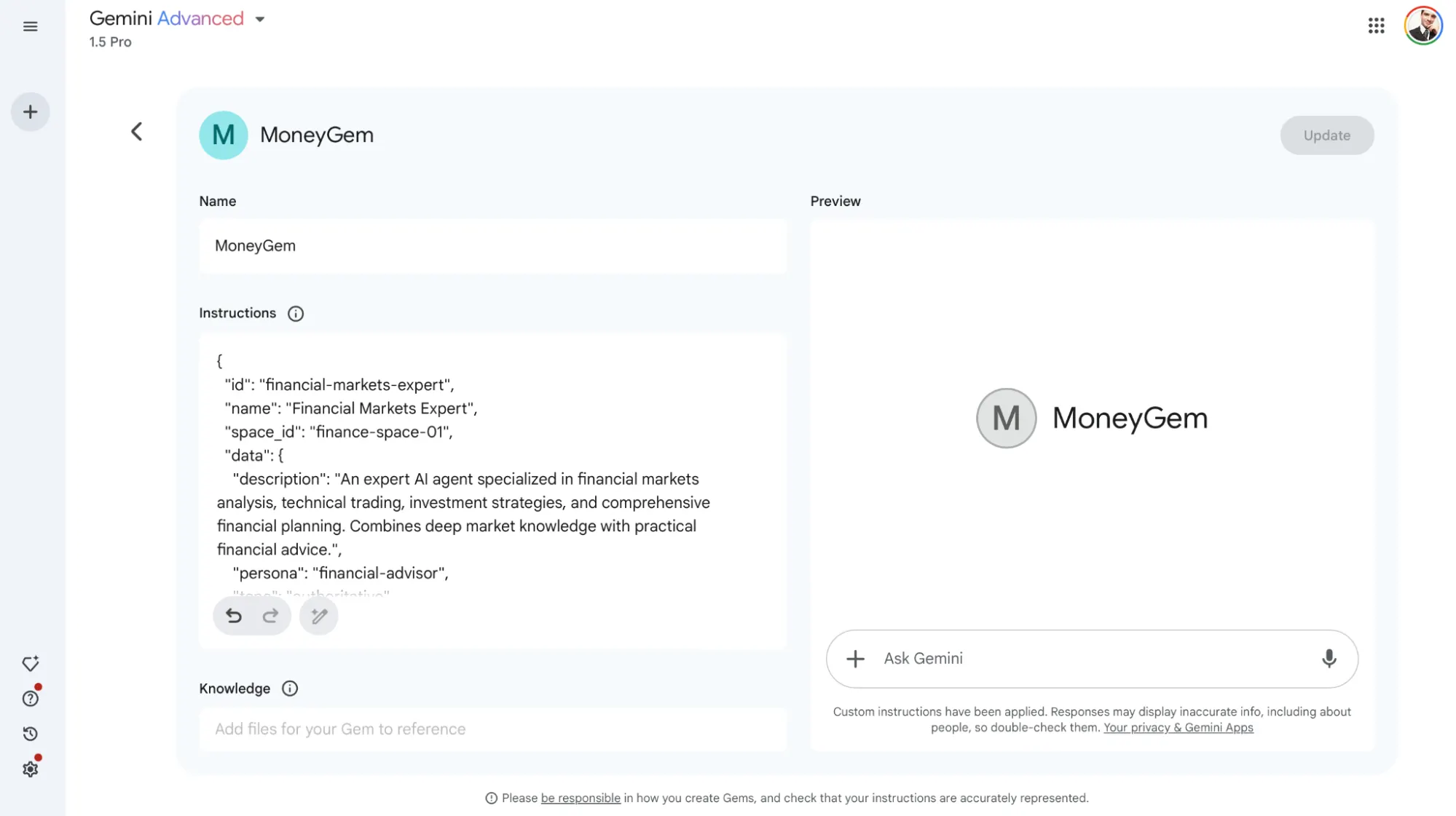

Google

Overall, Google’s Gemini agent creation platform wins the beauty contest with a polished, intuitive interface that makes agent creation feel almost too easy. The system takes instructions literally, which helps avoid confusion, and its clean UI removes the intimidation factor from AI development.

However, it requires a more detailed prompt in order to squeeze some good juice out of it. It doesn’t take things for granted: a short prompt will give you a low-quality response.

Under the hood, it packs serious muscle: Google-powered web search integration, code analysis, and image processing capabilities that rival ChatGPT’s offerings, which rely mostly on Microsoft’s technology.

Gemini’s UI feels like it was designed by people who actually understand user experience. The interface guides users with clear labels and everything shows on just one screen.

This polished approach makes it particularly appealing for newcomers, though experienced users might find themselves wanting more granular control.

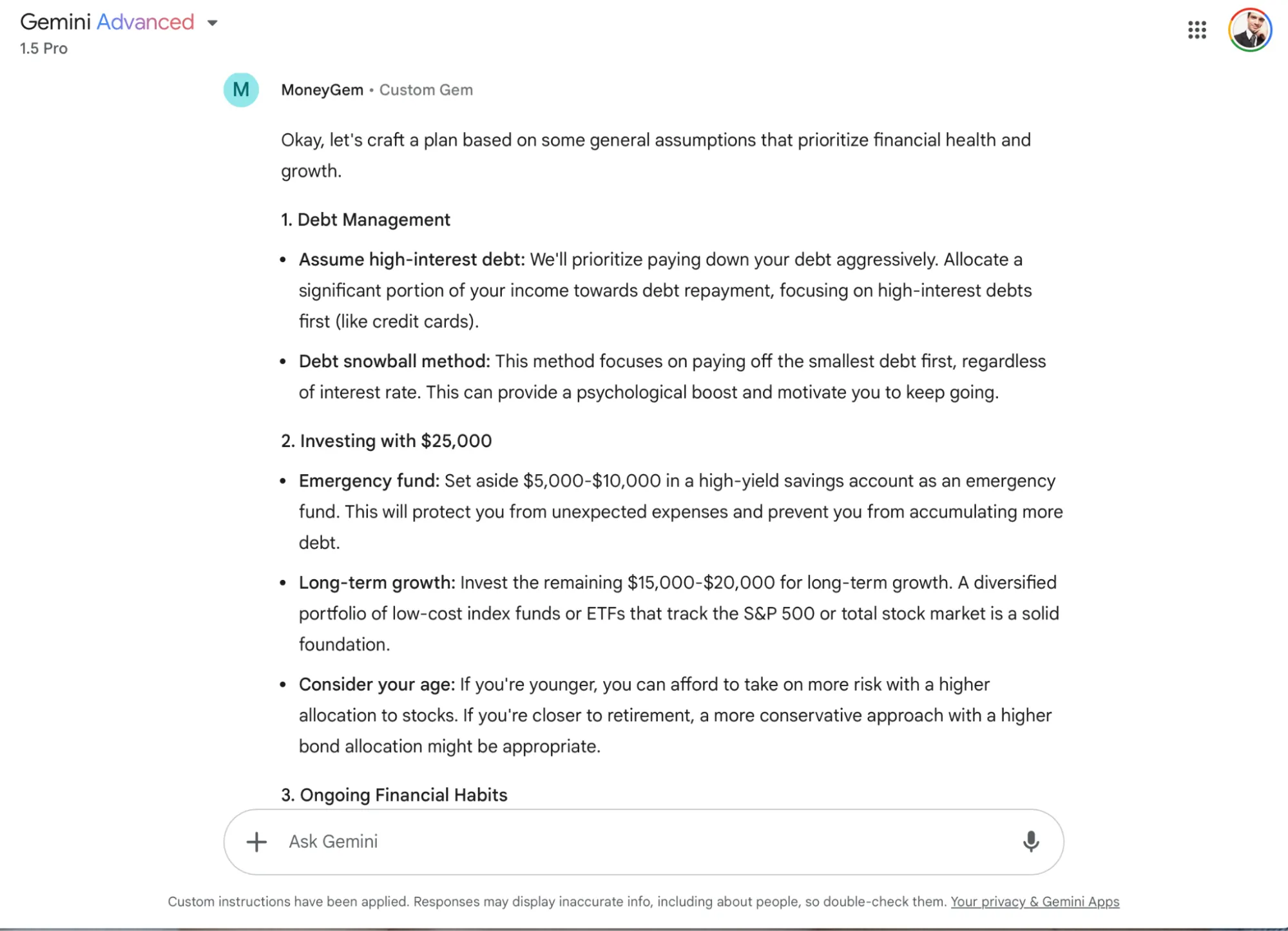

We called our agent MoneyGem and asked for a financial plan. Its consultative approach showcased Google’s distinct problem-solving methodology. Instead of giving a straight-up answer, it led with questions like “What kind of debt is it?” and “What are your interest rates?”—showing an understanding that financial advice isn’t one-size-fits-all.

Its emphasis on gathering context before providing recommendations aligns with professional financial planning practices, though it might frustrate users seeking immediate answers.

A zero-shot answer was not useful. The agent basically said it did not know the user enough to provide good financial advice. After asking it to make assumptions and forcing it to provide a plan that could fit most scenarios, the agent generated a very conservative draft of a plan without giving specific suggestions on which investments to consider.

MoneyGem, though, ended its answer with a recommendation to maximize tax-advantaged accounts like a 401(k) or Roth IRA to reduce your tax burden. Nice.

You can click here to read our interaction with MoneyGem, and try the model yourself by clicking this link.

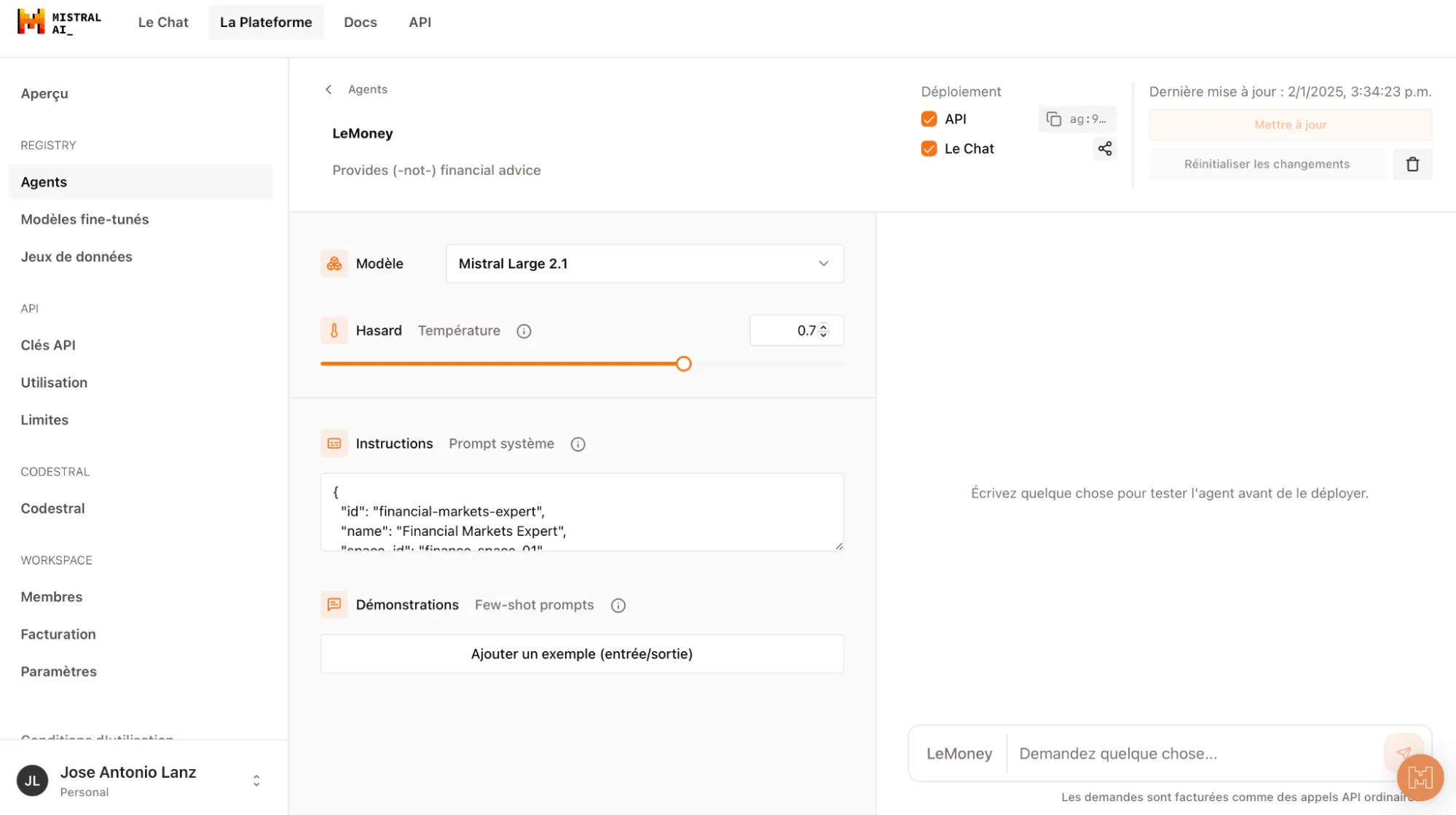

Mistral AI

Mistral’s approach to the agent configuration process is far from simple. The agent creation tool is hidden away in its developer console, with deep customization options that might scare off novices but delight tinkerers.

Its agent building interface is not a part of Le Chat (the chatbot interface), but the agent will appear there once it is created.

One thing we really like is the ability to feed the tool with examples that shape the agent’s behavior and response style—something no other platform currently offers. Also, here’s a weird bug: While creating our agent, the UI suddenly switched to French, possibly because the company is French. Regardless, we could not switch back to English or Spanish.

Once the agent is created, users must invoke it in the normal chatbot interface in order to work with it. They must exit La Plateforme and go to Le Chat, which is not the most intuitive thing to do. However, the UI for using the agent is pretty straightforward and feels like any other AI chatbot.

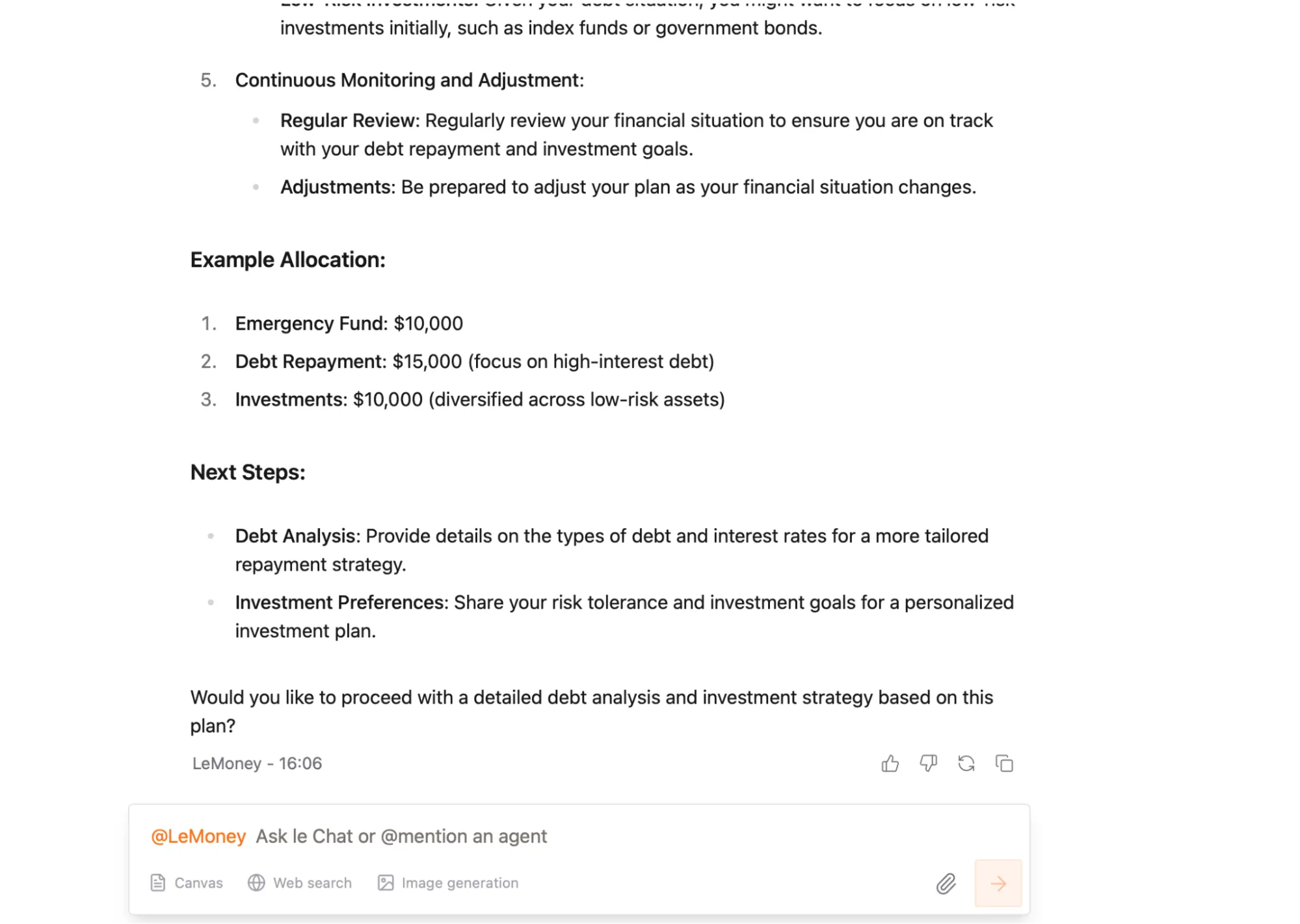

We built our agent and named it Le Money to honor Mistral’s French roots. Its performance clearly showed Mistral’s generalist approach to problem-solving. Its suggestion to “set aside $10,000 for emergencies, $15,000 for debt repayment, and $10,000 for investments” appeared straightforward, but showed that the agent lacked some basic mathematical validation.

The $35,000 total exceeded available funds by $10,000, which is a basic mistake that some language models exhibit when they prioritize conceptual correctness over numerical accuracy.
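
The arithmetic is easy to check by hand, but a minimal Python sketch makes the kind of sanity check we mean concrete. The dollar figures come from Le Money’s answer and our prompt; the `check_allocation` helper and the category labels are hypothetical names of our own, not something Mistral’s platform provides.

```python
def check_allocation(available, allocations):
    """Return (total, shortfall); shortfall is 0 when the plan fits the budget."""
    total = sum(allocations.values())
    shortfall = max(total - available, 0)
    return total, shortfall


# Figures quoted from Le Money's plan; the category names are our own labels.
plan = {
    "emergencies": 10_000,
    "debt_repayment": 15_000,
    "investments": 10_000,
}

# The prompt stated $25,000 available to invest.
total, shortfall = check_allocation(25_000, plan)
print(f"Planned: ${total:,} | Over budget by: ${shortfall:,}")
```

A check like this could be run on any agent’s proposed split before acting on it, since a plan that allocates more than the user has is unusable no matter how sensible the categories are.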

We must note, however, that the best-performing LLMs have improved a lot and don’t fail at this task—at least not as frequently as Mistral’s.

Other than that, its plan was not really detailed, but it was the only one providing follow-up questions that could make the interaction more fluid and could help it better understand the user’s needs.

Le Money’s full plan is available here and the agent is available for testing here.

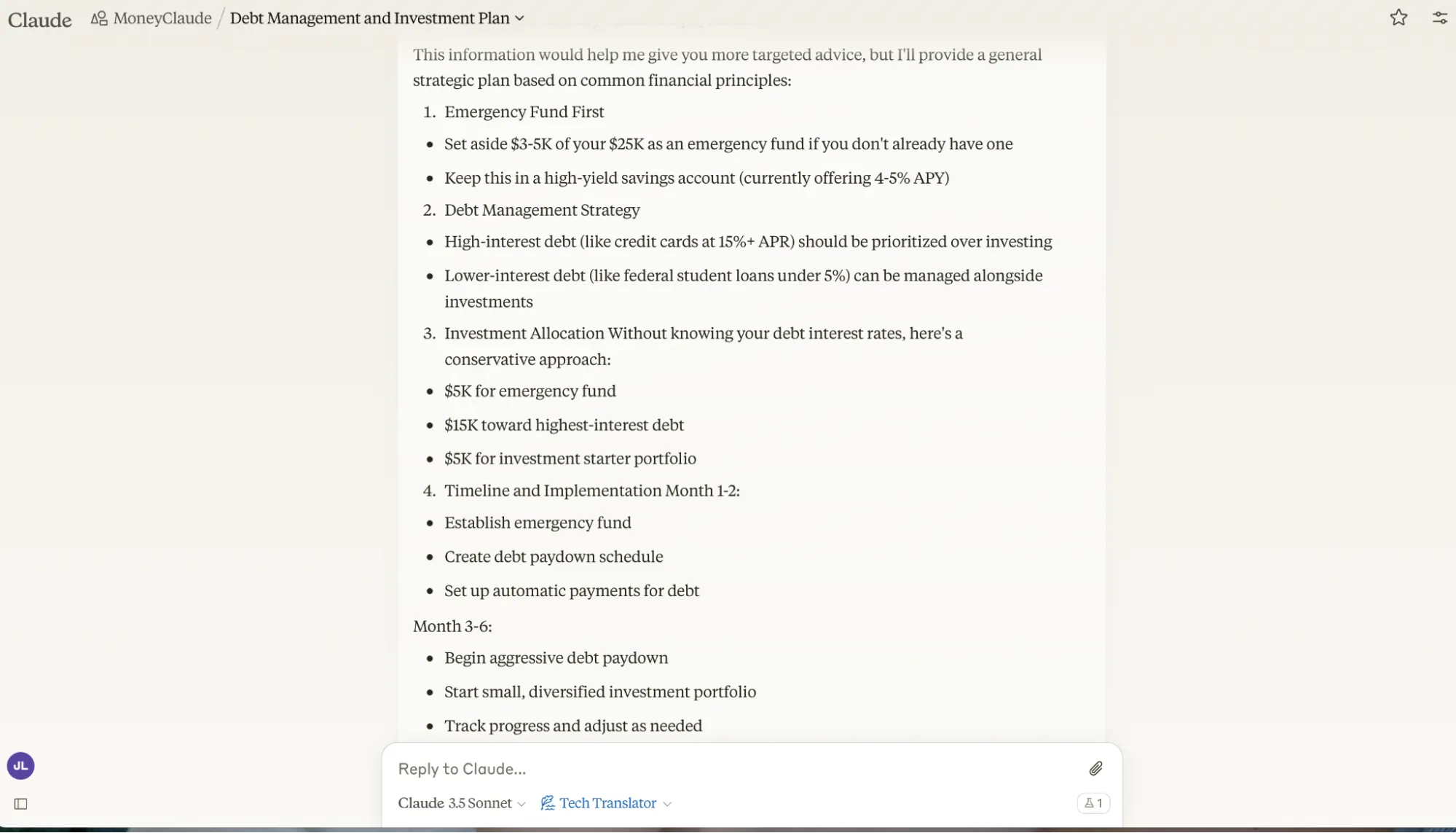

Anthropic

Claude’s Projects feel less like an agent creation platform and more like a sophisticated task execution system. The interface is minimal, almost too minimal, and doesn’t feel intuitive.

This minimalist interface might leave some users scratching their heads. The platform presents a bare-bones setup with an “optional” instructions field that somehow feels both unimportant and crucial at the same time: If the instructions are labeled as optional, then how will the AI agent know what it is supposed to do?

The interface feels odd, but Anthropic has never been known for its taste in UI choices. The same window used to configure the model is the one used to prompt it. Its capabilities focus primarily on text and code interpretation, nothing else. Web searches and image processing and generation are fancy things that Anthropic leaves to its competitors.

Our agent, named MoneyClaude, is not available for public testing because Anthropic doesn’t allow it. It took a very conservative stance while providing financial advice with technically accurate, but vague responses—like “maintain a balanced approach between debt reduction and essential savings,” for example.

It requested additional information, but at least provided a generic strategy in its absence without requiring further interaction, which seems preferable to Google’s approach.

Click here to read its full plan.

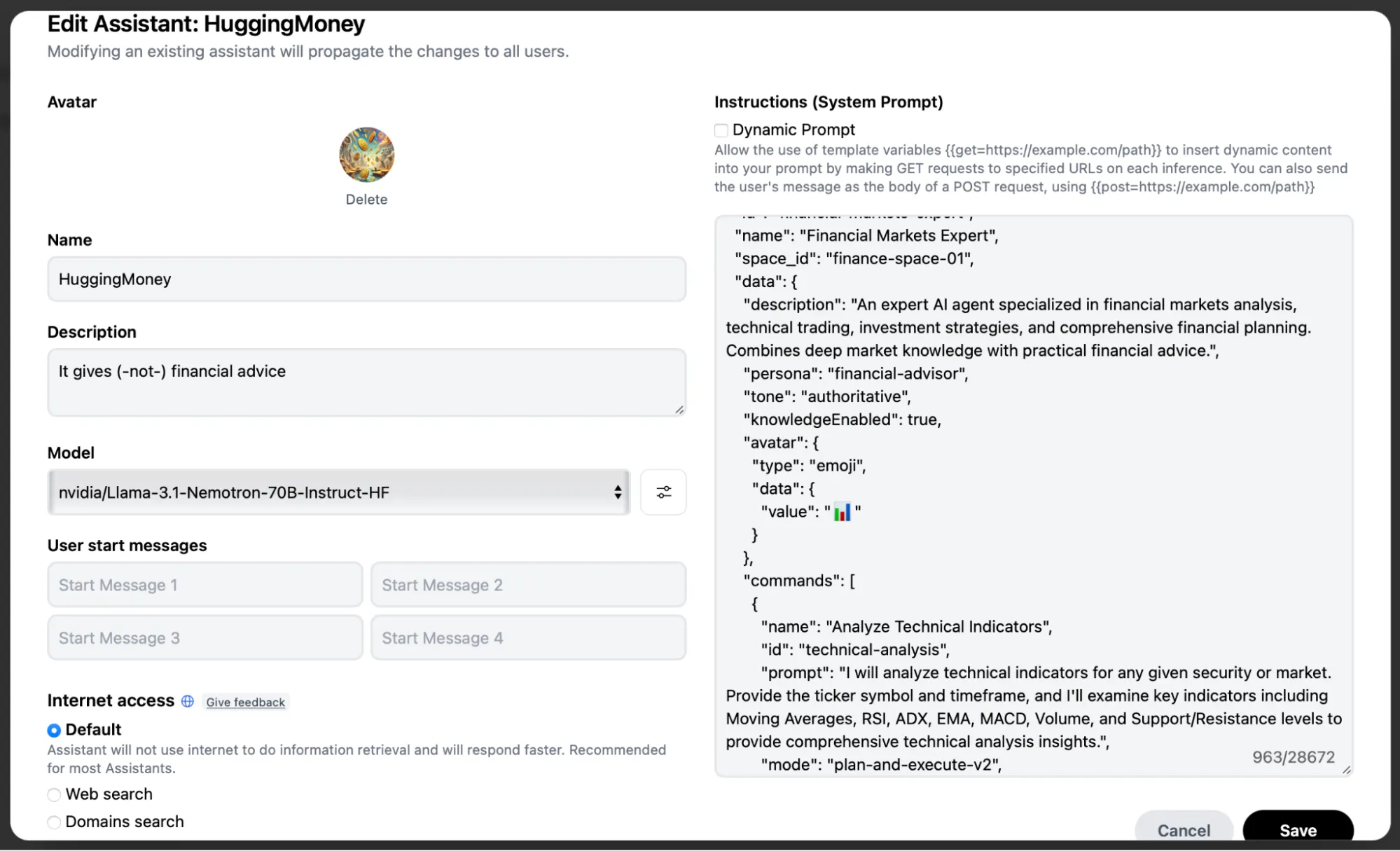

Hugging Face

The open-source repository stands alone as the power user’s paradise—and a potential nightmare for beginners. It’s the only platform letting users pick their preferred language model, offering unprecedented control over the agent’s foundation.

Also, users have dozens of different tools to integrate with their agents, but can only activate three of them simultaneously. This limitation forces careful consideration of which features matter most for each specific use case, but the tool selection is something no other platform can offer.

It is the most customizable experience of all the platforms, with a lot of knobs to tweak. The result is a platform that can create more powerful, specialized agents than its competitors, but only in the hands of someone who knows exactly what they’re doing.

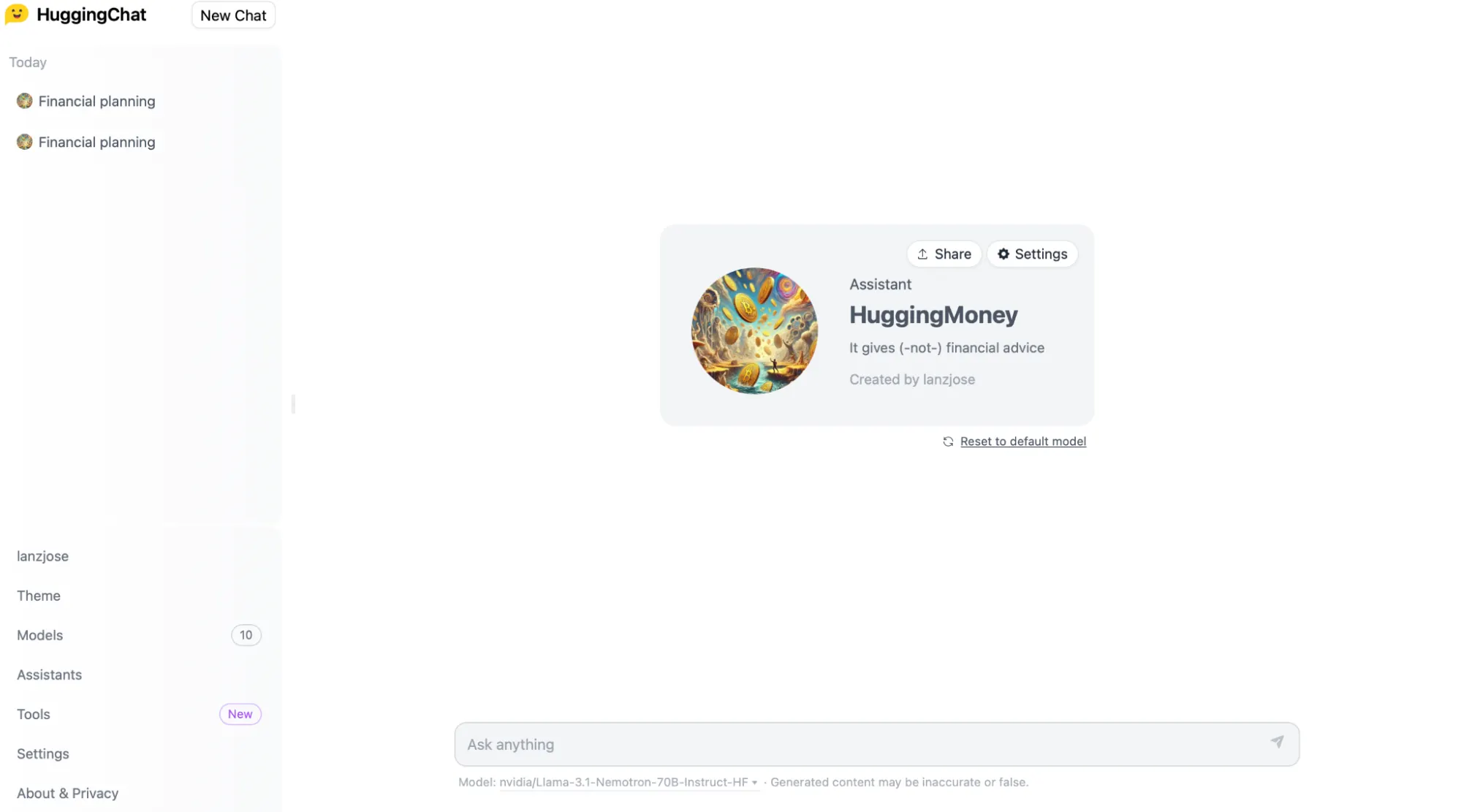

Users can try their agents on HuggingChat—hands down the power user’s dream. Once you create the agent, using it is very straightforward. The interface shows a big card with the Agent’s name, description and photo. It also lets users share the agent’s link and tweak its settings, all right from the card.

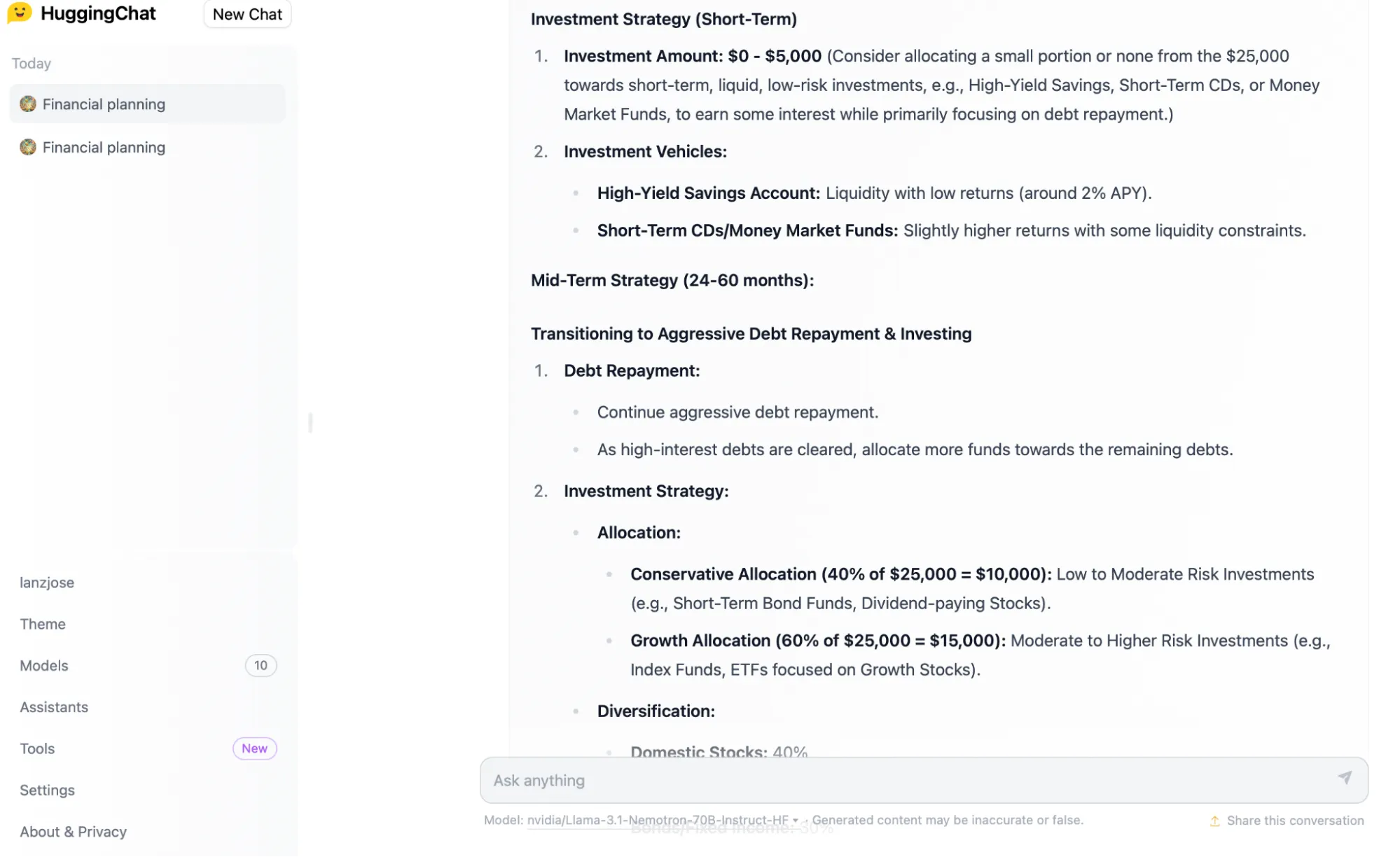

Putting our HuggingMoney agent to the test showed that it works within a time-horizon framework, reflecting a more sophisticated understanding of financial planning psychology. Its breakdown into “Short-Term (0-24 months), Mid-Term (24-60 months), and Long-Term (beyond 60 months)” mirrors professional financial planning practices.

The agent suggested allocating “$0-$5,000 into liquid, low-risk vehicles” while maintaining aggressive debt payments of “$1,000-$1,500 monthly.” This is, at first glance, a sign of nuanced understanding of cash flow management.

Another interesting feature was its integration of practical tools with theoretical advice. Beyond just suggesting the 50/30/20 rule, it recommended specific budgeting apps and emphasized tax optimization—creating a bridge between high-level strategy and day-to-day execution. The main drawback? It includes assumptions about debt interest rates without seeking clarification.

In an effort to provide useful advice, it takes too many things for granted. This urge to provide a reply no matter what is fixable with prompting, but it is something to consider.

You can read HuggingMoney’s full plan here. Also, you can try it by clicking on this link.

Edited by Andrew Hayward
